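GBase Cloud Data Warehouse (GCDW), developed in-house by GBase (南大通用), is a column-store, massively parallel processing (MPP), multi-instance elastic cloud data warehouse for very large data volumes. It runs both on and off the cloud and provides query and analytics services over massive data sets. This article covers use on physical and virtual machines; the pure-cloud edition will be covered separately.

The version used here is not a public release and is in use at only a few customer sites. This document is just an early walk-through of the whole process.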

References

GCDW metadata service: FoundationDB cluster-mode configuration and high-availability testing

MinIO S3 distributed cluster setup

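Product overview

GBase Cloud Data Warehouse supports both on-premises deployment (compute-storage separation in a private cloud) and cloud deployment. With elastic scaling, it can be offered as SaaS on a public cloud, giving customers an enterprise-grade elastic data warehouse that works out of the box and reduces system complexity and cost. Deployed on premises in a private cloud as a compute-storage-separated cluster, it decouples compute resources from storage resources, so both can be scaled out quickly. Users manage the database's compute and storage resources through the unified service management interface provided by the private cloud.

Environment preparation

The environment in this article is for testing only: 1 MinIO S3 server, 1 FoundationDB metadata server, and 3 GCDW virtual hosts. FoundationDB shares one of the GCDW servers, because my laptop does not have enough memory for more, so only simple tests are possible.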

- minio 10.0.2.181 redhat 8

- gcdw 10.0.2.210,10.0.2.211,10.0.2.212 redhat 7

- foundationDB 10.0.2.210 (shared with a GCDW host)

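S3 service

An S3 service normally comes with the private cloud, so you only need to request resources. This article does not discuss how to build an S3 cluster.

Here I use a single-node MinIO that I set up myself for testing, on the Red Hat 8 host 10.0.2.181.

For a MinIO cluster, see "MinIO S3 distributed cluster setup".

Download and installation

/data/aws_s3/ is the directory used to store the data.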

[root@redhat8-3 ~]# wget https://dl.min.io/server/minio/release/linux-amd64/minio

[root@redhat8-3 ~]# mv minio /usr/local/bin/

[root@redhat8-3 ~]# chmod +x /usr/local/bin/minio

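[root@redhat8-3 ~]# mkdir -p /data/aws_s3/

Starting the service

This is the simplest start command from the official documentation; it runs in the foreground, not in the background.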

[root@redhat8-3 ~]# minio server /data/aws_s3/ --console-address ":8080"

Automatically configured API requests per node based on available memory on the system: 137

Finished loading IAM sub-system (took 0.0s of 0.0s to load data).

Status: 1 Online, 0 Offline.

API: http://10.0.2.181:9000 http://192.168.122.1:9000 http://127.0.0.1:9000

RootUser: minioadmin

RootPass: minioadmin

Console: http://10.0.2.181:8080 http://192.168.122.1:8080 http://127.0.0.1:8080

RootUser: minioadmin

RootPass: minioadmin

Command-line: https://docs.min.io/docs/minio-client-quickstart-guide

$ mc alias set myminio http://10.0.2.181:9000 minioadmin minioadmin

Documentation: https://docs.min.io配置

创建gcdw用的s3账号

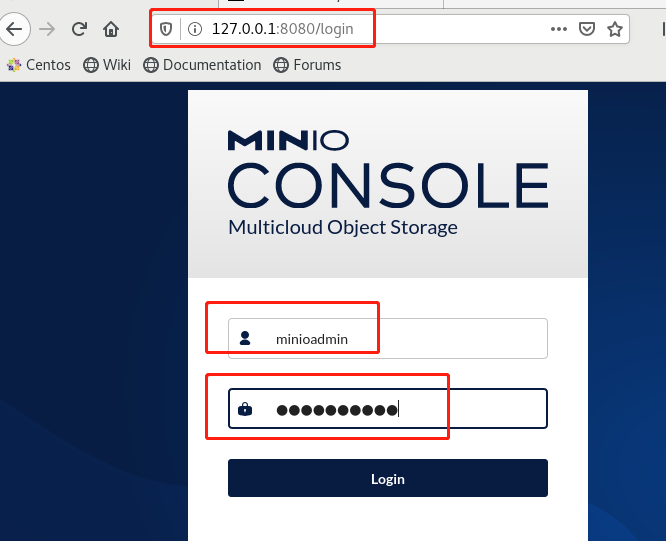

打开浏览器http://10.0.2.181:8080,用默认的用户名和密码登录, 参加前面输出的RootUser和RootPass。

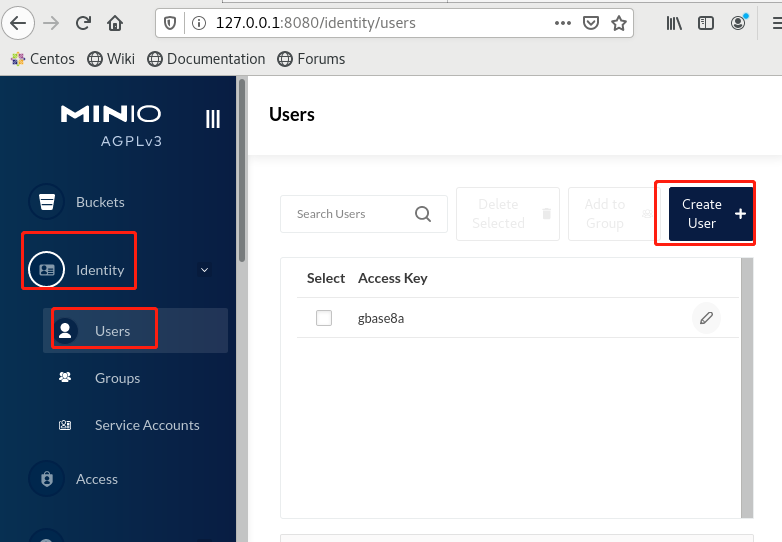

In the left-hand menu, choose Identity -> Users -> Create User.

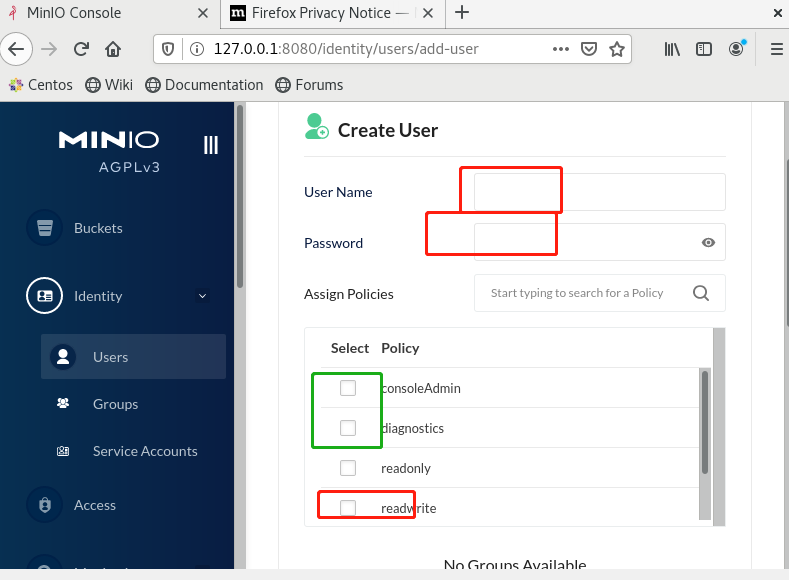

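Fill in the user name and password. In the policy section below I ticked readwrite, which makes the read-only and write-only policies unnecessary. I also ticked the first two policies. Click Save.

Creating the bucket for GCDW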

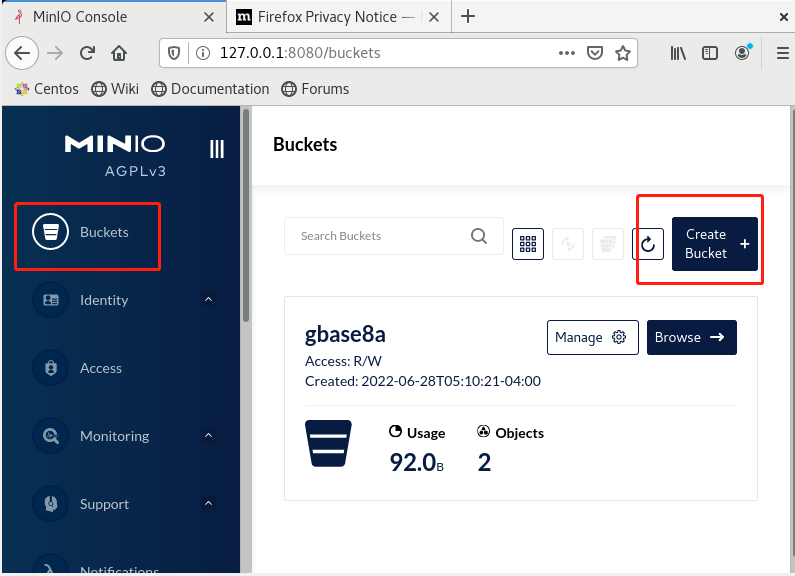

Log out, then log back in with the newly created account (gbase8a in this example).

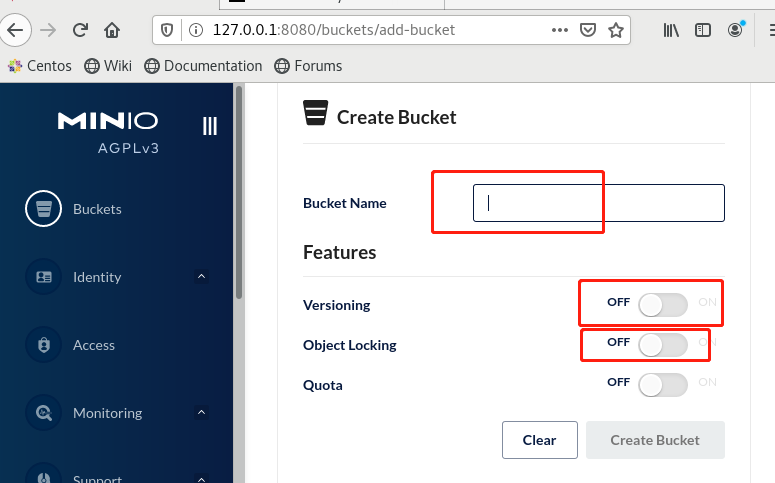

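Select Buckets on the left, then Create Bucket.

The name must be lowercase (uppercase letters appear to be rejected); I used only letters and digits. Of the features below, I enabled everything except the quota. Since I am testing the database, not MinIO, I did not test quotas. Click Create Bucket to finish.

We create a bucket named gbase8a (see the screenshot in the previous section). By accident, the bucket name ended up identical to the user name.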
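
The naming restriction noted above can be checked before opening the console. The following is a minimal sketch, assuming MinIO applies the general Amazon S3 bucket naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit). The function name is hypothetical and not part of MinIO or GCDW.

```python
import re

# Basic Amazon S3 bucket naming rules (assumed to apply to MinIO as well):
# 3-63 characters, lowercase letters / digits / dots / hyphens only,
# first and last character must be a letter or a digit.
_BUCKET_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes the basic checks listed above."""
    if _BUCKET_RE.fullmatch(name) is None:
        return False
    # Two adjacent dots are not allowed either.
    return ".." not in name
```

With this check, `gbase8a` passes, while `GBase8a` (uppercase) and `gbase 8a` (space) are rejected.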

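FoundationDB metadata service

This example uses the Red Hat 7 VM at 10.0.2.210 and runs FoundationDB as a single node. For a FoundationDB cluster, see "GCDW metadata service: FoundationDB cluster-mode configuration and high-availability testing".

Download and installation

Download the server rpm from the address below (this walk-through installs 6.3.24, as the file listing shows).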

https://github.com/apple/foundationdb/releases?q=6.1.12&expanded=true

-rw-r--r--. 1 root root 18327552 Jun 28 17:57 foundationdb-server-6.3.24-1.el7.x86_64.rpm

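Then install it.

rpm -ivh foundationdb-server-6.3.24-1.el7.x86_64.rpm

Starting the service

Start the foundationdb service with systemctl.

Configuration

By default the service listens only on 127.0.0.1. Edit the configuration file /etc/foundationdb/fdb.cluster and change the IP to the host's externally reachable IP.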
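
The cluster file is a single line of the form `description:id@ip:port`. As a minimal sketch of the edit described above, the helper below swaps the IP in such a line while leaving the description, ID and port untouched. The function name is hypothetical, it handles only the single-coordinator case used in this article, and I assume the foundationdb service has to be restarted afterwards for the change to take effect.

```python
def set_cluster_ip(cluster_line: str, new_ip: str) -> str:
    """Replace the coordinator IP in a single-coordinator fdb.cluster line.

    Expected format: description:id@ip:port
    """
    prefix, _, address = cluster_line.strip().partition("@")
    if not address or "," in address:
        # Multi-coordinator files list several ip:port pairs; out of scope here.
        raise ValueError("expected exactly one coordinator address")
    _old_ip, _, port = address.rpartition(":")
    return f"{prefix}@{new_ip}:{port}"
```

For example, `set_cluster_ip("EVKz13zi:F78TwLJE@127.0.0.1:4500", "10.0.2.210")` yields the line shown in the `cat` output of this section.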

[root@rh7_210 foundationdb]# cat /etc/foundationdb/fdb.cluster

EVKz13zi:F78TwLJE@10.0.2.210:4500

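[root@rh7_210 foundationdb]#

Installing the GCDW host edition database

This example first installs a single-node cluster on 10.0.2.210 and then expands it with node 10.0.2.211.

Preparation

The host edition has the same installation requirements as 9.5.3. The key points are creating dbaUser in advance, setting permissions on the installation directory, and running SetSysEnv.py to set the environment variables.

In addition, the FoundationDB client must be installed on every machine. It is downloaded from the same address as the server package above.

-rw-r--r--. 1 root root 36210116 Jun 28 17:49 foundationdb-clients-6.3.24-1.el7.x86_64.rpm

Remember to edit /etc/foundationdb/fdb.cluster on each machine so that it points to the FoundationDB service IP, 10.0.2.210 here, and then confirm that the client works.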

[root@localhost foundationdb]# fdbcli

Using cluster file `fdb.cluster'.

The database is available.

Welcome to the fdbcli. For help, type `help'.

fdb> status

Using cluster file `fdb.cluster'.

Configuration:

Redundancy mode - single

Storage engine - memory-2

Coordinators - 1

Usable Regions - 1

Cluster:

FoundationDB processes - 1

Zones - 1

Machines - 1

Memory availability - 2.3 GB per process on machine with least available

>>>>> (WARNING: 4.0 GB recommended) <<<<<

Fault Tolerance - 0 machines

Server time - 06/30/22 10:59:39

Data:

Replication health - (Re)initializing automatic data distribution

Moving data - unknown (initializing)

Sum of key-value sizes - unknown

Disk space used - 111 MB

Operating space:

Storage server - 1.0 GB free on most full server

Log server - 21.1 GB free on most full server

Workload:

Read rate - 21 Hz

Write rate - 0 Hz

Transactions started - 5 Hz

Transactions committed - 0 Hz

Conflict rate - 0 Hz

Backup and DR:

Running backups - 0

Running DRs - 0

Client time: 06/30/22 10:59:39

fdb> exit

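Installation

The demo.options configuration file

Most parameters of the host edition are the same as in V953. The additional parameters are:

- GCDW_S3_BUCKET=gbase8a: the name of the bucket created earlier.

- GCDW_S3_ENDPOINT=http://10.0.2.181:9000: the address of the S3 API. As the MinIO start-up output shows, 8080 is the web console and 9000 is the API.

- GCDW_S3_ACCESS_KEY_ID=gbase8a: the S3 login user name created earlier. In this example I carelessly made it the same as the bucket name, so take care not to confuse the two.

- GCDW_S3_SECRET_KEY=XXXXXXX: the S3 login password created earlier.

- GCDW_S3_REGION='': I do not know what this is for, but according to the description it can be left empty, written as two single quotes.

- gcluster_instance_name=gbase8a: the instance name. It can be anything, so I simply reused the same name once more.

- instance_root_name=gbase: the user name of the instance administrator (the tenant name). The host edition no longer seems to have a multi-tenant layer.

- instance_root_password=YYYYYYYYY: the password of the instance administrator (the tenant password).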
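
Before running the installer, it can help to confirm that none of the extra parameters is missing. This is a minimal sketch, assuming the options file is plain `key = value` lines with `#` comments, as in the listing in this section. The function names are hypothetical, and treating all eight keys as mandatory is my assumption rather than documented installer behaviour.

```python
# Extra keys the article adds on top of a V953-style options file.
# Treating every one of them as required is an assumption.
GCDW_KEYS = (
    "GCDW_S3_BUCKET", "GCDW_S3_ENDPOINT", "GCDW_S3_ACCESS_KEY_ID",
    "GCDW_S3_SECRET_KEY", "GCDW_S3_REGION",
    "gcluster_instance_name", "instance_root_name", "instance_root_password",
)


def parse_options(text: str) -> dict:
    """Parse 'key = value' lines, skipping blank lines and # comments."""
    options = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Values such as 'gbase1234' or '' are quoted in the file; drop the quotes.
        options[key.strip()] = value.strip().strip("'")
    return options


def missing_gcdw_keys(options: dict) -> list:
    """Return the GCDW-specific keys that are absent from `options`."""
    return [key for key in GCDW_KEYS if key not in options]
```

An empty list from `missing_gcdw_keys(parse_options(open("demo.options").read()))` means every GCDW-specific key is present, including an intentionally empty `GCDW_S3_REGION`.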

[gbase@rh7_210 gcinstall]$ cat demo.options

installPrefix= /opt

coordinateHost = 10.0.2.210

coordinateHostNodeID = 210

dataHost = 10.0.2.210

#existCoordinateHost =

#existDataHost =

#existGcwareHost=

gcwareHost = 10.0.2.210

gcwareHostNodeID = 210

dbaUser = gbase

dbaGroup = gbase

dbaPwd = 'gbase1234'

GCDW_S3_BUCKET=gbase8a

GCDW_S3_ENDPOINT=http://10.0.2.181:9000

GCDW_S3_ACCESS_KEY_ID=gbase8a

GCDW_S3_SECRET_KEY=XXXXXXX

GCDW_S3_REGION=''

gcluster_instance_name=gbase8a

instance_root_name=gbase

instance_root_password=YYYYYYYYY

rootPwd = '111111'

#rootPwdFile = rootPwd.json

#characterSet = utf8

#dbPort = 5258

#sshPort = 22

[gbase@rh7_210 gcinstall]$

Installation process

The process is the same as for 953.

[gbase@rh7_210 gcinstall]$ ./gcinstall.py --silent=demo.options

........

*********************************************************************************

Do you accept the above licence agreement ([Y,y]/[N,n])? y

*********************************************************************************

Welcome to install GBase products

*********************************************************************************

Environmental Checking on cluster nodes.

checking rpms ...

checking gconfig service

parse extendCfg.xml

CoordinateHost:

10.0.2.210

DataHost:

10.0.2.210

GcwareHost:

10.0.2.210

gcluster_instance_name:

gbase8a

instance_root_name:

gbase

Are you sure to install GCluster on these nodes ([Y,y]/[N,n])? y

。。。

10.0.2.210 install gcware and cluster on host 10.0.2.210 successfully.

adding user and nodes message to foundationdb

adding user and nodes message to foundationdb successfully

Starting all gcluster nodes ...

adding new datanodes to gcware ...

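[gbase@rh7_210 gcinstall]$

Checking the cluster status

Logging out and back in makes the environment variables take effect. Alternatively, run source /home/gbase/.gbase_profile.

The output shows 1 management (gcware) node, 1 coordinator node, 1 free data node (FreeNode), and 0 warehouses.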

[gbase@rh7_210 gcinstall]$ exit

exit

[root@rh7_210 gbase]# su gbase

[gbase@rh7_210 ~]$ gcadmin

CLUSTER STATE: ACTIVE

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 10.0.2.210 | OPEN |

------------------------------------

====================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

====================================================

| NodeName | IpAddress | gcluster | DataState |

----------------------------------------------------

| coordinator1 | 10.0.2.210 | OPEN | 0 |

----------------------------------------------------

===========================================================

| GBASE CLUSTER FREE DATA NODE INFORMATION |

===========================================================

| NodeName | IpAddress | gnode | syncserver | DataState |

-----------------------------------------------------------

| FreeNode1 | 10.0.2.210 | OPEN | OPEN | 0 |

-----------------------------------------------------------

0 warehouse

1 coordinator node

1 free data node

Creating a warehouse

Generating the warehouse configuration template

[gbase@rh7_210 gcinstall]$ gcadmin createwh e wh.xml

Editing the warehouse configuration

[gbase@rh7_210 gcinstall]$ vi wh.xml

[gbase@rh7_210 gcinstall]$ cat wh.xml

<?xml version='1.0' encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.2.210"/>

</rack>

<wh_name name="testwh"/>

<comment message="a warehouse for test"/>

</servers>

[gbase@rh7_210 gcinstall]$

Creating the warehouse from the configuration

[gbase@rh7_210 gcinstall]$ gcadmin createwh wh.xml

parse config file wh.xml

generate wh id: wh00001

add wh information to cluster

add nodes to wh

create gclusterdb.dual successful

gcadmin create wh [testwh] successful

[gbase@rh7_210 gcinstall]$

Viewing the warehouse

The output now shows 1 warehouse.

[gbase@rh7_210 gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 10.0.2.210 | OPEN |

------------------------------------

====================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

====================================================

| NodeName | IpAddress | gcluster | DataState |

----------------------------------------------------

| coordinator1 | 10.0.2.210 | OPEN | 0 |

----------------------------------------------------

=======================================

| GBASE WAREHOUSE INFORMATION |

=======================================

| WhName | comment |

---------------------------------------

| testwh | a warehouse for test |

---------------------------------------

1 warehouse: testwh

1 coordinator node

0 free data node

[gbase@rh7_210 gcinstall]$

The warehouse details show 1 compute node.

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh

CLUSTER STATE: ACTIVE

=======================================

| GBASE WAREHOUSE INFORMATION |

=======================================

| WhName | comment |

---------------------------------------

| testwh | a warehouse for test |

---------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

| node1 | 10.0.2.210 | OPEN |

---------------------------------------------------------------

1 data node

[gbase@rh7_210 gcinstall]$

Setting the default warehouse

alter USER `admin` DEFAULT_WAREHOUSE = 'small'

Logging in to the cluster

The login account is the one configured in demo.options earlier.

[gbase@rh7_210 gcinstall]$ gccli -h10.0.2.210 -ugbase -pYYYYYYYY

GBase client 9.8.0.2.11f94a815. Copyright (c) 2004-2022, GBase. All Rights Reserved.

gbase> use warehouse testwh;

Query OK, 0 rows affected (Elapsed: 00:00:00.00)

gbase> create database testdb;

Query OK, 1 row affected (Elapsed: 00:00:00.02)

gbase> use testdb;

Query OK, 0 rows affected (Elapsed: 00:00:00.01)

gbase> create table t1 as select now() d from dual;

Query OK, 1 row affected (Elapsed: 00:00:00.26)

gbase> select * from t1;

+---------------------+

| d |

+---------------------+

| 2022-06-29 09:14:25 |

+---------------------+

1 row in set (Elapsed: 00:00:00.05)

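Changing cluster configuration parameters

I suspect this is a bug in the installation script of this test version: the IP in its configuration files is not updated automatically. Later versions may not need this step.

The affected files are gbase_8a_gcluster.cnf for gcluster and gbase_8a_gbase.cnf for gnode. In both, set the gcluster_metadata_server_ip line to the correct IP (192.168.1.1 becomes 10.0.2.210 here).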
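
When several nodes need the same fix, the substitution can be scripted. This is a minimal sketch that rewrites only the `gcluster_metadata_server_ip` line in the text of a configuration file and leaves every other line untouched. The function name is hypothetical, and the locations of the two .cnf files are not given in this article, so reading and writing them is left to the caller.

```python
import re

# Match the whole gcluster_metadata_server_ip line; group 1 keeps "key=" as written.
_METADATA_IP_RE = re.compile(
    r"^([ \t]*gcluster_metadata_server_ip[ \t]*=[ \t]*).*$", re.MULTILINE
)


def set_metadata_server_ip(conf_text: str, new_ip: str) -> str:
    """Return `conf_text` with gcluster_metadata_server_ip set to `new_ip`."""
    return _METADATA_IP_RE.sub(lambda m: m.group(1) + new_ip, conf_text)
```

Apply it to the contents of both files, write the result back, and keep a backup copy of the originals.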

gcluster_metadata_server_ip=192.168.1.1

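====>

gcluster_metadata_server_ip=10.0.2.210

Expanding coordinator and compute resources

This step adds 10.0.2.211 as both a coordinator node and a compute node. The current version does not support expanding the gcware management cluster.

Note that expanding coordinator resources requires stopping the cluster services.

Preparation

Besides the standard requirements and installing the FoundationDB client, the FoundationDB configuration file must also be copied to the new node (substitute that node's IP in the command).

scp /etc/foundationdb/fdb.cluster 10.0.2.212:/etc/foundationdb/

The new node is ready once status in fdbcli reports normally on it, as shown in the earlier section.

Expansion configuration file

This is the same as a 953 expansion, except that the database login user name and password, genDBUser and genDBPwd, must be added.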
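
The relationship between the installation options and the expansion options can be summarised in code. This is a minimal sketch of how the values in demo_2.options relate to those in demo.options, based only on the two files shown in this article. The function name is hypothetical, and using the last octet of the IP as the node ID merely follows the convention used here.

```python
def expansion_options(base: dict, new_host: str, db_user: str, db_pwd: str) -> dict:
    """Derive options for adding `new_host` as a coordinator and data node."""
    opts = dict(base)
    # Nodes installed earlier move to the exist* entries.
    opts["existCoordinateHost"] = base["coordinateHost"]
    opts["existDataHost"] = base["dataHost"]
    opts["existGcwareHost"] = base["gcwareHost"]
    # gcware cannot be expanded in this version, so its entries are dropped
    # (they are commented out in demo_2.options).
    opts.pop("gcwareHost", None)
    opts.pop("gcwareHostNodeID", None)
    # The node being added; node ID = last octet, as in the article.
    opts["coordinateHost"] = new_host
    opts["coordinateHostNodeID"] = new_host.rsplit(".", 1)[-1]
    opts["dataHost"] = new_host
    # Database login required for expansion.
    opts["genDBUser"] = db_user
    opts["genDBPwd"] = db_pwd
    return opts
```

All other keys, such as the S3 settings and instance names, are carried over unchanged.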

[gbase@rh7_210 gcinstall]$ cat demo_2.options

installPrefix= /opt

coordinateHost = 10.0.2.211

coordinateHostNodeID = 211

dataHost = 10.0.2.211

existCoordinateHost =10.0.2.210

existDataHost =10.0.2.210

existGcwareHost=10.0.2.210

#gcwareHost = 10.0.2.211

#gcwareHostNodeID = 211

dbaUser = gbase

dbaGroup = gbase

dbaPwd = 'gbase1234'

GCDW_S3_BUCKET=gbase8a

GCDW_S3_ENDPOINT=http://10.0.2.181:9000

GCDW_S3_ACCESS_KEY_ID=gbase8a

GCDW_S3_SECRET_KEY=XXXXXXXX

GCDW_S3_REGION=''

gcluster_instance_name=gbase8a

instance_root_name=gbase

instance_root_password=YYYYYYYY

rootPwd = '111111'

genDBUser=gbase

genDBPwd=YYYYYYYY

#rootPwdFile = rootPwd.json

#characterSet = utf8

#dbPort = 5258

#sshPort = 22

[gbase@rh7_210 gcinstall]$

Expansion process

[gbase@rh7_210 gcinstall]$ ./gcinstall.py --silent=demo_2.options

*********************************************************************************

Thank you for choosing GBase product!

。。。。。

*********************************************************************************

Do you accept the above licence agreement ([Y,y]/[N,n])? ^Cy

*********************************************************************************

Welcome to install GBase products

*********************************************************************************

Environmental Checking on cluster nodes.

checking rpms ...

checking gconfig service

CoordinateHost:

10.0.2.211

DataHost:

10.0.2.211

gcluster_instance_name:

gbase8a

instance_root_name:

gbase

Are you sure to install GCluster on these nodes ([Y,y]/[N,n])? y

。。。。。

10.0.2.210 install cluster on host 10.0.2.210 successfully.

10.0.2.211 install cluster on host 10.0.2.211 successfully.

adding new coordinator nodes message to foundationdb

adding new coordinator nodes message to foundationdb successfully

update and sync configuration file...

sync cluster conf file ...

Starting all gcluster nodes ...

sync coordinator system tables ...

check database user and password ...

check database user and password successful

adding new datanodes to gcware ...

[gbase@rh7_210 gcinstall]$

Viewing the cluster after expansion

The output shows 2 coordinator nodes and 1 FreeNode waiting to be used.

[gbase@rh7_210 gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 10.0.2.210 | OPEN |

------------------------------------

====================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

====================================================

| NodeName | IpAddress | gcluster | DataState |

----------------------------------------------------

| coordinator1 | 10.0.2.210 | OPEN | 0 |

----------------------------------------------------

| coordinator2 | 10.0.2.211 | OPEN | 0 |

----------------------------------------------------

=======================================

| GBASE WAREHOUSE INFORMATION |

=======================================

| WhName | comment |

---------------------------------------

| testwh | a warehouse for test |

---------------------------------------

===========================================================

| GBASE CLUSTER FREE DATA NODE INFORMATION |

===========================================================

| NodeName | IpAddress | gnode | syncserver | DataState |

-----------------------------------------------------------

| FreeNode1 | 10.0.2.211 | OPEN | OPEN | 0 |

-----------------------------------------------------------

1 warehouse: testwh

2 coordinator node

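1 free data node

Expanding the warehouse

The expansion is completed by adding nodes to the existing warehouse. See the corresponding part of the compute expansion section later in this article.

Expanding compute resources

With 2 coordinator nodes and 2 compute nodes already in place, this step adds 10.0.2.212 as a pure compute resource. The prerequisites are the same, in particular the FoundationDB client and its configuration file; see the earlier Preparation section.

Configuration file

Only dataHost is new. For the other entries, just list the existing nodes.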

[gbase@rh7_210 gcinstall]$ cat demo_3.options

installPrefix= /opt

coordinateHost =

coordinateHostNodeID = 211

dataHost = 10.0.2.212

existCoordinateHost =10.0.2.210,10.0.2.211

existDataHost =10.0.2.210,10.0.2.211

existGcwareHost=10.0.2.210

#gcwareHost = 10.0.2.211

#gcwareHostNodeID = 211

dbaUser = gbase

dbaGroup = gbase

dbaPwd = 'gbase1234'

GCDW_S3_BUCKET=gbase8a

GCDW_S3_ENDPOINT=http://10.0.2.181:9000

GCDW_S3_ACCESS_KEY_ID=gbase8a

GCDW_S3_SECRET_KEY=XXXXXXXX

GCDW_S3_REGION=''

gcluster_instance_name=gbase8a

instance_root_name=gbase

instance_root_password=YYYYYYYY

rootPwd = '111111'

genDBUser=gbase

genDBPwd=YYYYYYYY

#rootPwdFile = rootPwd.json

#characterSet = utf8

#dbPort = 5258

#sshPort = 22

[gbase@rh7_210 gcinstall]$

Expansion process

./gcinstall.py --silent=demo_3.options -a

。。。

10.0.2.212 install cluster on host 10.0.2.212 successfully.

update and sync configuration file...

sync cluster conf file ...

Starting all gcluster nodes ...

adding new datanodes to gcware ...

[gbase@rh7_210 gcinstall]$

[gbase@rh7_210 gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 10.0.2.210 | OPEN |

------------------------------------

====================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

====================================================

| NodeName | IpAddress | gcluster | DataState |

----------------------------------------------------

| coordinator1 | 10.0.2.210 | OPEN | 0 |

----------------------------------------------------

| coordinator2 | 10.0.2.211 | OPEN | 0 |

----------------------------------------------------

================================================

| GBASE WAREHOUSE INFORMATION |

================================================

| WhName | comment |

------------------------------------------------

| testwh2 | a warehouse for test to nodes |

------------------------------------------------

===========================================================

| GBASE CLUSTER FREE DATA NODE INFORMATION |

===========================================================

| NodeName | IpAddress | gnode | syncserver | DataState |

-----------------------------------------------------------

| FreeNode1 | 10.0.2.212 | OPEN | OPEN | 0 |

-----------------------------------------------------------

1 warehouse: testwh2

2 coordinator node

1 free data node

[gbase@rh7_210 gcinstall]$

Expanding the warehouse

Use gcadmin addnodes to add the compute node to the existing warehouse.

Creating the configuration file

[gbase@rh7_210 gcinstall]$ cp gcChangeInfo.xml gcChangeInfo_extend_212.xml

[gbase@rh7_210 gcinstall]$ vi gcChangeInfo_extend_212.xml

[gbase@rh7_210 gcinstall]$ cat gcChangeInfo_extend_212.xml

<?xml version="1.0" encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.2.212"/>

</rack>

</servers>

[gbase@rh7_210 gcinstall]$

Adding the new compute node to the existing warehouse

[gbase@rh7_210 gcinstall]$ gcadmin addnodes gcChangeInfo_extend_212.xml testwh

gcadmin add nodes ...

flush statemachine success

gcadmin addnodes to wh [testwh] success

[gbase@rh7_210 gcinstall]$ gcadmin

CLUSTER STATE: ACTIVE

====================================

| GBASE GCWARE CLUSTER INFORMATION |

====================================

| NodeName | IpAddress | gcware |

------------------------------------

| gcware1 | 10.0.2.210 | OPEN |

------------------------------------

====================================================

| GBASE COORDINATOR CLUSTER INFORMATION |

====================================================

| NodeName | IpAddress | gcluster | DataState |

----------------------------------------------------

| coordinator1 | 10.0.2.210 | OPEN | 0 |

----------------------------------------------------

| coordinator2 | 10.0.2.211 | OPEN | 0 |

----------------------------------------------------

===================================================

| GBASE WAREHOUSE INFORMATION |

===================================================

| WhName | comment |

---------------------------------------------------

| testwh | a warehouse for test three nodes |

---------------------------------------------------

1 warehouse: testwh

2 coordinator node

0 free data node

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh

CLUSTER STATE: ACTIVE

===================================================

| GBASE WAREHOUSE INFORMATION |

===================================================

| WhName | comment |

---------------------------------------------------

| testwh | a warehouse for test three nodes |

---------------------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

| node1 | 10.0.2.210 | OPEN |

---------------------------------------------------------------

| node2 | 10.0.2.211 | OPEN |

---------------------------------------------------------------

| node3 | 10.0.2.212 | OPEN |

---------------------------------------------------------------

3 data node

[gbase@rh7_210 gcinstall]$
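
To verify a scale-out from a script rather than by eye, the node table printed by `gcadmin showcluster wh` can be parsed. This is a minimal sketch, assuming the row layout matches the output reproduced in this article (`| nodeN | IP | STATE |`). The function name is hypothetical, and the layout may differ in other versions.

```python
import re

# One data row of the WAREHOUSE DATA NODE INFORMATION table.
_NODE_ROW = re.compile(
    r"\|\s*(node\d+)\s*\|\s*(\d+\.\d+\.\d+\.\d+)\s*\|\s*(\w+)\s*\|"
)


def parse_warehouse_nodes(output: str) -> list:
    """Return (name, ip, state) tuples for each data node row in `output`."""
    nodes = []
    for line in output.splitlines():
        match = _NODE_ROW.fullmatch(line.strip())
        if match:
            nodes.append(match.groups())
    return nodes
```

After adding 10.0.2.212, the list should contain three tuples, each with state `OPEN`.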

Shrinking the warehouse

This is the reverse of expansion: use rmnodes to remove the compute node. Only the commands and output are shown below.

[gbase@rh7_210 gcinstall]$ gcadmin rmnodes gcChangeInfo_extend_212.xml testwh

gcadmin remove nodes ...

flush statemachine success

gcadmin rmnodes from wh [testwh] success

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh

CLUSTER STATE: ACTIVE

===================================================

| GBASE WAREHOUSE INFORMATION |

===================================================

| WhName | comment |

---------------------------------------------------

| testwh | a warehouse for test three nodes |

---------------------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

| node1 | 10.0.2.210 | OPEN |

---------------------------------------------------------------

| node2 | 10.0.2.211 | OPEN |

---------------------------------------------------------------

2 data node

[gbase@rh7_210 gcinstall]$

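Testing shows that all compute resources can be removed from a warehouse. Queries against it then fail until the warehouse has at least 1 compute resource again.

[gbase@rh7_210 gcinstall]$ gcadmin rmnodes gcChangeInfo.xml testwh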

gcadmin remove nodes ...

flush statemachine success

gcadmin rmnodes from wh [testwh] success

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh

CLUSTER STATE: ACTIVE

===================================================

| GBASE WAREHOUSE INFORMATION |

===================================================

| WhName | comment |

---------------------------------------------------

| testwh | a warehouse for test three nodes |

---------------------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

0 data node

[gbase@rh7_210 gcinstall]$

gbase> select * from wh1db.t1;

ERROR 1708 (HY000): (GBA-02EX-0004) Failed to get metadata:

DETAIL: Fail to Get GCWarehouse.

Once compute resources are added back, queries recover automatically without any further action.

gbase> select * from wh1db.t1;

+-------+

| id |

+-------+

| 12345 |

| 2 |

| 2 |

| 2 |

| 2 |

+-------+

5 rows in set (Elapsed: 00:00:00.07)

gbase>

Multiple warehouses

We split the 3 compute nodes into 2 warehouses: testwh1 with 1 node and testwh2 with 2 nodes.
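
Warehouse definition files all share one shape, so they can be generated instead of edited by hand. This is a minimal sketch that builds the same element structure as the wh.xml template used earlier in this article (`servers`, `rack`, `node`, `wh_name`, `comment`). The function name is hypothetical, and attribute order and whitespace will differ slightly from the hand-written files.

```python
import xml.etree.ElementTree as ET


def warehouse_xml(name: str, comment: str, node_ips: list) -> str:
    """Build a warehouse definition in the layout of the article's wh.xml."""
    servers = ET.Element("servers")
    rack = ET.SubElement(servers, "rack")
    for ip in node_ips:
        ET.SubElement(rack, "node", {"ip": ip})
    ET.SubElement(servers, "wh_name", {"name": name})
    ET.SubElement(servers, "comment", {"message": comment})
    body = ET.tostring(servers, encoding="unicode")
    return '<?xml version="1.0" encoding="utf-8"?>\n' + body
```

For the split described here, one call with `["10.0.2.212"]` and one with `["10.0.2.210", "10.0.2.211"]` would produce the two files.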

Configuration files

[gbase@rh7_210 gcinstall]$ cat wh1.xml

<?xml version='1.0' encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.2.212"/>

</rack>

<wh_name name="testwh1"/>

<comment message="a warehouse for test one nodes"/>

</servers>

[gbase@rh7_210 gcinstall]$ cat wh2.xml

<?xml version='1.0' encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.2.210"/>

<node ip="10.0.2.211"/>

</rack>

<wh_name name="testwh2"/>

<comment message="a warehouse for test two nodes"/>

</servers>

[gbase@rh7_210 gcinstall]$

Creating the warehouses

[gbase@rh7_210 gcinstall]$ gcadmin createwh wh.xml

parse config file wh.xml

generate wh id: wh00008

add wh information to cluster

add nodes to wh

create gclusterdb.dual successful

gcadmin create wh [testwh1] successful

[gbase@rh7_210 gcinstall]$ gcadmin createwh wh2.xml

parse config file wh2.xml

generate wh id: wh00009

add wh information to cluster

add nodes to wh

gcadmin create wh [testwh2] successful

[gbase@rh7_210 gcinstall]$

Checking the result

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh1

CLUSTER STATE: ACTIVE

=================================================

| GBASE WAREHOUSE INFORMATION |

=================================================

| WhName | comment |

-------------------------------------------------

| testwh1 | a warehouse for test one nodes |

-------------------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

| node1 | 10.0.2.212 | OPEN |

---------------------------------------------------------------

1 data node

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh2

CLUSTER STATE: ACTIVE

=================================================

| GBASE WAREHOUSE INFORMATION |

=================================================

| WhName | comment |

-------------------------------------------------

| testwh2 | a warehouse for test two nodes |

-------------------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

| node1 | 10.0.2.210 | OPEN |

---------------------------------------------------------------

| node2 | 10.0.2.211 | OPEN |

---------------------------------------------------------------

2 data node

Rescheduling compute resources

Node 211 is moved from testwh2 to testwh1 using rmnodes and addnodes. Only the execution is shown below.

[gbase@rh7_210 gcinstall]$ vi gcChangeInfo_211.xml

[gbase@rh7_210 gcinstall]$ cat gcChangeInfo_211.xml

<?xml version="1.0" encoding="utf-8"?>

<servers>

<rack>

<node ip="10.0.2.211"/>

</rack>

</servers>

[gbase@rh7_210 gcinstall]$ gcadmin rmnodes gcChangeInfo_211.xml testwh2

gcadmin remove nodes ...

flush statemachine success

gcadmin rmnodes from wh [testwh2] success

[gbase@rh7_210 gcinstall]$ gcadmin addnodes gcChangeInfo_211.xml testwh1

gcadmin add nodes ...

flush statemachine success

gcadmin addnodes to wh [testwh1] success

[gbase@rh7_210 gcinstall]$
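
The two-step move above can be wrapped so that the add only runs if the remove succeeded. This is a minimal sketch that uses the `gcadmin rmnodes` and `gcadmin addnodes` invocations exactly as they appear in the transcript. The Python function names are hypothetical, and `migrate` must be run as the dbaUser on a node where gcadmin is on the PATH.

```python
import subprocess


def migration_commands(change_xml: str, source_wh: str, target_wh: str) -> list:
    """Return the two gcadmin invocations that move nodes between warehouses."""
    return [
        ["gcadmin", "rmnodes", change_xml, source_wh],
        ["gcadmin", "addnodes", change_xml, target_wh],
    ]


def migrate(change_xml: str, source_wh: str, target_wh: str) -> None:
    """Run rmnodes then addnodes; stop at the first failing command."""
    for command in migration_commands(change_xml, source_wh, target_wh):
        # check=True raises CalledProcessError on a non-zero exit status,
        # so addnodes is never attempted after a failed rmnodes.
        subprocess.run(command, check=True)
```

Calling `migrate("gcChangeInfo_211.xml", "testwh2", "testwh1")` corresponds to the session above. Between the two commands the node is a free data node that belongs to neither warehouse.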

Result after the move

testwh1 gained node 211 and now has 2 nodes, while testwh2 is left with 1.

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh1

CLUSTER STATE: ACTIVE

=================================================

| GBASE WAREHOUSE INFORMATION |

=================================================

| WhName | comment |

-------------------------------------------------

| testwh1 | a warehouse for test one nodes |

-------------------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

| node1 | 10.0.2.212 | OPEN |

---------------------------------------------------------------

| node2 | 10.0.2.211 | OPEN |

---------------------------------------------------------------

2 data node

[gbase@rh7_210 gcinstall]$ gcadmin showcluster wh testwh2

CLUSTER STATE: ACTIVE

=================================================

| GBASE WAREHOUSE INFORMATION |

=================================================

| WhName | comment |

-------------------------------------------------

| testwh2 | a warehouse for test two nodes |

-------------------------------------------------

===============================================================

| WAREHOUSE DATA NODE INFORMATION |

===============================================================

| NodeName | IpAddress | gnode |

---------------------------------------------------------------

| node1 | 10.0.2.210 | OPEN |

---------------------------------------------------------------

1 data node

[gbase@rh7_210 gcinstall]$

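Summary

In the host edition of GBase GCDW with compute-storage separation, expanding the coordinator cluster requires restarting the cluster services, which affects running workloads. Most of the time goes into stopping the services and installing the services on the new node.

When only compute resources are expanded, no data redistribution is needed because compute and storage are separated, so the whole operation does not affect existing workloads at all. Most of the time goes into installing the services on the new node.

With multiple warehouses, compute nodes can be moved between them flexibly according to load and business needs.

This setup is generally recommended for private-cloud or physical-machine users with large data volumes and many servers. Even in a lab environment, FoundationDB and GCDW each need at least 3 nodes for high availability. If S3 also has to be deployed separately instead of reusing what the cloud already provides, my guess is that the starting point is 10, 30, or even 50 machines.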